
Ultimate guide: SAP S/4HANA data and landscape management

Paul Hammersley

Paul has for many years been a remarkable technical force at EPI-USE Labs. As Senior Vice-President of the ALM Products, his portfolio includes System Landscape Optimisation, and he has hands-on experience of implementing Data Sync Manager (DSM) and helping clients to manage data across the breadth of their SAP landscapes.

About this guide

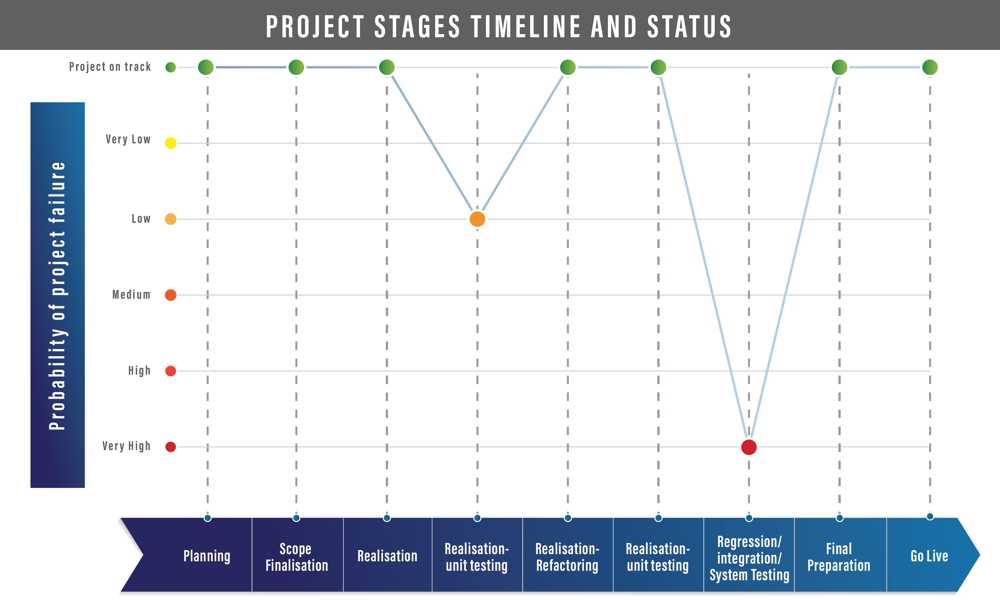

A lack of accurate test data, in the right place at the right time, is often the reason a project is delayed, goes over budget or, in extreme cases, fails.

S/4HANA, however you're choosing to get there, is likely to be the biggest SAP®-related project on your horizon. Use it as an opportunity not just to address the testing landscape requirements of that project, but also to re-evaluate and define what your data and landscape management needs will look like in the future. The ERP system that's been the core of your business IT for many years is evolving in terms of its scope, hardware, user interface, interaction with satellite systems and ability to support innovation. As you innovate more quickly, effective testing becomes ever more crucial, and the quality of test data has a direct impact on it.

Get the right technology into your team's hands to allow agility in the non-production landscape without compromising data security and integrity. Oh, and save some money along the way by understanding how data volumes in non-production environments drive hardware costs. Finally, if any part of your SAP estate, or its data, is being left behind, find an efficient, modern way to make that data available for compliance and queries, without creating a future support nightmare by running 'display-only' SAP systems on ageing technology stacks.

1. Simplify your SAP S/4HANA journey with a world-class Test Data Management (TDM) solution

For any significant IT project, there are always the questions:

“Where will we do our various phases of project testing?”

“Is the data in those systems good enough?”

“Will a successful test on that data mean our project is going to be successful?”

In the SAP world, there is often a follow-on question:

“Shall we request copies of production for the project to test on?”

This is because of the rich, and sometimes verbose, data model used by SAP and the complex interrelationships between different modules and submodules, which make it incredibly difficult to build accurate test data by hand. During my time working in and around SAP, and more specifically the Test Data Management (TDM) space, I've seen many organisations struggle to get good test data, and projects compromised as a result. This isn't just because the project might be testing on old data that doesn't represent the current state of the business, but also because the expert users co-opted into the project spend a good percentage of their time searching unsuccessfully for test data, and then creating it manually, rather than actually testing. I've also seen that situation continue for some time until a particularly large project comes along and highlights test data as a risk to the project. The later the risk is raised, the more likely it is that project money will be thrown at the problem and full copies of production will be spun up. When the risk is raised earlier, and there is time for options to be evaluated, that's often when a purchase of TDM software occurs. In many cases, a good product was identified long ago, but the business case was never successfully made, or never approved, depending on your point of view.

Over the years, I've seen a wide variety of projects meet this criterion and give us the opportunity to introduce a TDM solution to a client. Now, for the first time, there is one project that every single organisation running SAP ERP is considering/planning/starting/running/recovering from/finishing (delete as appropriate): an S/4HANA project. So, this is the right time for me to share the information that can help you understand when, and how, a world-class TDM solution can simplify, improve and guarantee aspects of your S/4HANA journey, and also why effective Test Data and Landscape Management is so crucial on the other side of that project.

There are three ways to get to S/4HANA:

- Greenfield – a clean installation of SAP which is then configured from scratch, perhaps with a legacy SAP system for reference but open to significant changes in configuration, enterprise structure and so on.

- Brownfield – the existing system is taken through a platform migration (S/4HANA runs exclusively on the HANA database, which runs only on Linux servers) and an upgrade, similar to previous upgrades but on a much larger scale.

- Selective Data Transitions (SDT) – a complex project to take a legacy system but exclude some parts and/or carry out translations at the same time to enable business transformations.

This guide covers all aspects of the second and third approaches. If you are embarking on a greenfield project, please skip to the section 'During: enabling hybrid cloud options'.

Please also spend the time to consider what will happen to the legacy SAP system. The Archive Central solution is an excellent way to avoid keeping a ‘display-only’ SAP system for compliance and historical data queries. There is more information on that in this blog and in the section below ‘What about legacy SAP data?’.

Throughout the guide, I will reference engines of the Data Sync Manager™ (DSM) suite of products from EPI-USE Labs. This is the market-leading SAP TDM solution. But don’t just take my word for it. Check out our client testimonials or even better, join us at one of our many User Group / INSPIRE events and hear directly from our clients.


2. S/4HANA Migration: before

Landscape Management in a continually-improving S/4HANA world

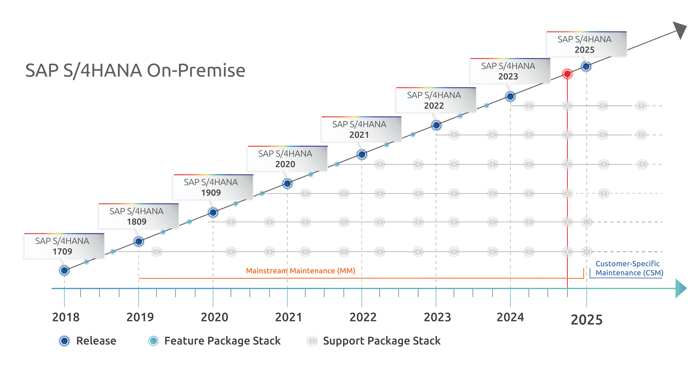

Upgrading to S/4HANA is just the first experience of a new paradigm for functionality progression with SAP S/4HANA. Once a system has been upgraded to S/4HANA, there may be compatibility packs which need to be phased out, feature packs to be installed and, soon enough, another incremental S/4HANA upgrade (such as 1709 to 2021). But let's begin at the start: what can we do before the project even kicks off?

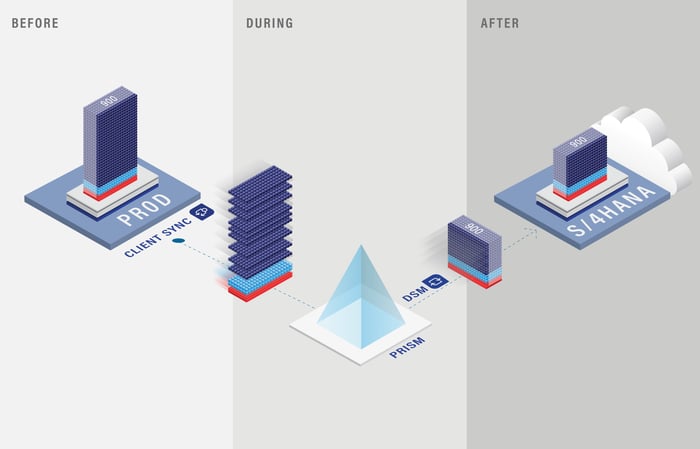

Before: rationalise the landscape before a brownfield migration

A lot of organisations have a sprawling SAP non-production landscape. Some systems are old copies of production, and some multi-client systems are relics of projects long since completed. Over the last 20 years, enterprise-grade storage has become cheaper and more manageable, and as a result SAP landscapes have been allowed to use ever more space. With S/4 looming, and in-memory database technology being much more expensive than disk, now is the time to lose the excess baggage. At the same time, ensure you have good test data for every part of the S/4 project, however you choose to approach it. With the Data Sync Manager (DSM) suite you can spin up new system shells, populate them with lean clients containing masked data, and use these to replace existing test systems. Alternatively, you can use the powerful client deletion capability of Client Sync™, part of the DSM suite, to remove redundant clients and to clear out the ones you want to repopulate with good data. Find out how to uncover invisible SAP test data to recover costs.

Before: S/4 preparation steps

There are a number of steps that can be carried out even before your S/4 project begins, such as Customer Vendor Integration (CVI). This, and other preparation work, may also kick off data clean-up activity, and there's no need to wait for the project to begin before tackling it. EPI-USE Labs provides an S/4HANA Assessment which includes lots of useful information about your existing SAP environment. Three of the tiles in the assessment provide detailed information on the amount of work to be done for CVI.

This can give you a good starting point to understand how much work is required in this area.
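One useful measure of the CVI workload is simply the number of customer and vendor master records that do not yet have a Business Partner (BP) link. The Python sketch below is illustrative only and is not how the EPI-USE Labs assessment works. It assumes the master records and existing links have already been extracted into plain Python collections (in an SAP ERP system the links are typically held in the CVI link tables, such as CVI_CUST_LINK and CVI_VEND_LINK); the class name, function names and data are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class CviSnapshot:
    """Hypothetical extract of customer/vendor masters and their BP links."""
    customers: frozenset[str]
    vendors: frozenset[str]
    customer_bp_links: dict[str, str]  # customer number -> BP number
    vendor_bp_links: dict[str, str]    # vendor number -> BP number


def unlinked(masters: frozenset[str], links: dict[str, str]) -> list[str]:
    """Master records with no Business Partner link yet, in sorted order."""
    return sorted(m for m in masters if m not in links)


def cvi_backlog(snapshot: CviSnapshot) -> dict[str, int]:
    """Count customers and vendors that still need a BP before conversion."""
    return {
        "customers_without_bp": len(unlinked(snapshot.customers, snapshot.customer_bp_links)),
        "vendors_without_bp": len(unlinked(snapshot.vendors, snapshot.vendor_bp_links)),
    }


# Illustrative data only.
snapshot = CviSnapshot(
    customers=frozenset({"C1", "C2", "C3"}),
    vendors=frozenset({"V1", "V2"}),
    customer_bp_links={"C1": "BP100"},
    vendor_bp_links={"V1": "BP200", "V2": "BP201"},
)
print(cvi_backlog(snapshot))  # {'customers_without_bp': 2, 'vendors_without_bp': 0}
```

Returning the sorted list of unlinked records, rather than just a count, makes it easy to hand the actual backlog to the functional team for clean-up.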

Having the capability to bring data down on demand to the development or sandbox system now could make these functional pre-projects much less time-consuming, and more likely to succeed in showcasing the work to be done on production. See how Object Sync™, also part of the DSM suite, works.

Before: mask sensitive data

Multiple systems integrators (SIs) and niche consultancies may be involved in your S/4 project, and they may need to start looking at your non-production systems to provide recommendations, prepare analysis reports and scope potential projects. These organisations may be connecting from all over the globe and need to see accurate data, but not real personal data. The number of headline-grabbing data breaches worldwide is growing: don't risk someone downloading a table of sensitive data from a QA system and selling it to the highest bidder. Data Secure™, part of the DSM suite, gives you complete control over all sensitive data without diminishing the quality of the data for testing. Packed with delivered content and the expertise of the EPI-USE Labs implementation teams, it means you'll soon be able to make test data available to everyone who will be involved in the S/4HANA journey.
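Masking for testing usually has to keep data realistic and consistent: the same person should be masked to the same value wherever they appear, so that documents still link up across tables. One generic way to achieve that is keyed, deterministic pseudonymisation using an HMAC. The sketch below illustrates that general technique only; it is not a description of how Data Secure works. The key, name lists and function names are hypothetical.

```python
from __future__ import annotations

import hashlib
import hmac

# Hypothetical secret: keep it outside the non-production system so the
# masked values cannot be mapped back to the originals from test data alone.
MASKING_KEY = b"example-key-held-outside-non-production"

FIRST_NAMES = ["Alex", "Sam", "Jordan", "Morgan", "Taylor", "Casey"]
LAST_NAMES = ["Smith", "Naidoo", "Jones", "Botha", "Garcia", "Khan"]


def _digest(value: str) -> bytes:
    """Keyed SHA-256 digest: the same input and key always give the same bytes."""
    return hmac.new(MASKING_KEY, value.encode("utf-8"), hashlib.sha256).digest()


def mask_name(first: str, last: str) -> tuple[str, str]:
    """Replace a real name with a realistic pseudonym, consistently."""
    d = _digest(f"{first}|{last}")
    return FIRST_NAMES[d[0] % len(FIRST_NAMES)], LAST_NAMES[d[1] % len(LAST_NAMES)]


def mask_digits(value: str) -> str:
    """Replace every digit, keeping length and punctuation such as spaces and '+'."""
    d = _digest(value)
    return "".join(
        str(d[i % len(d)] % 10) if ch.isdigit() else ch
        for i, ch in enumerate(value)
    )


print(mask_name("Jane", "Doe") == mask_name("Jane", "Doe"))  # True
print(mask_digits("+27 21 555 0101"))  # same layout, pseudonymised digits
```

Because the output depends only on the input and the key, a masked employee in one table still matches the same masked employee in another. Note that replaced digits will not form valid check digits (for example in bank account numbers), which a production-grade masking tool would need to handle.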

3. S/4HANA Migration: during

Now the project begins. Let’s look at the important part the data and non-production landscape plays in the project itself.

During: sandbox

SAP recommends building a sandbox before your project starts in full. Read more about what happens in that sandbox here. The more accurate the sandbox, the better; but the more complete it is, the bigger the system and the larger the HANA appliance it needs. Use DSM to build a lean, dedicated sandbox for the project, and consider the cloud, given that the duration of the sandbox project is not clear and the business has to be supported in the meantime. Here is how Ballance Agri used DSM in the sandbox phase of their S/4HANA project.

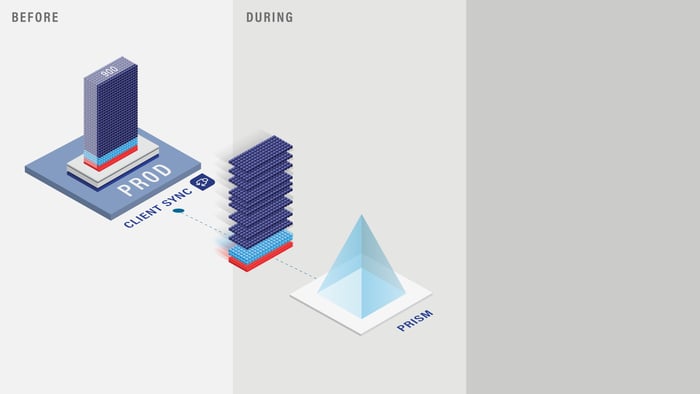

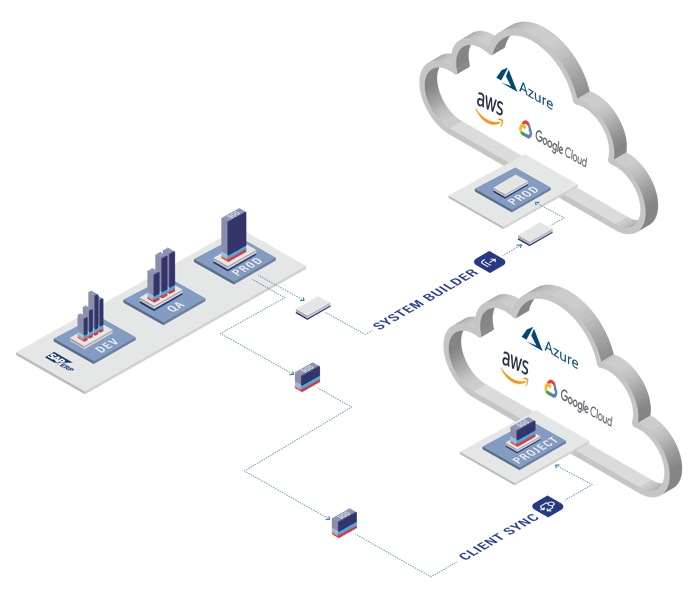

During: rebuild non-production from new production

For anyone taking the brownfield approach, I would recommend considering how wide the gap is between the configuration, customisation and code in the development system and in production. Over the years, that gap has grown wider and wider: old Z-code abandoned, configuration taken to QA before the project was cancelled, and even third-party add-ons loaded in development but never uninstalled. Our migration teams use DSM to rebuild a new non-production landscape from the production system as part of their cloud migration strategy, and this could be part of your S/4 migration approach too.

Rebuilding development and QA from production means a cleaner start on the other side, with a smaller gap between development and production, so there is less chance of defects sneaking through. The reduction in the volume of custom code to be refactored is a strong driver for this. Some organisations have even done it with full copies as part of their migration, only to discover the costs on the other side when it was too late. Using DSM to build smaller new test and development systems brings the same advantages, closing the gap between development and production and reducing the amount of Z-code to rework, but at a fraction of the cost, since smaller appliances can be used.
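One way to gauge that gap is to compare the inventory of custom development objects in development with what actually exists in production: anything in the customer namespace (conventionally, names starting with Z or Y) that exists only in development is a candidate to leave behind rather than refactor. The sketch below assumes both inventories have already been extracted as sets of object names, for example from each system's object directory (table TADIR); the object names and function names are hypothetical.

```python
from __future__ import annotations


def is_custom(obj_name: str) -> bool:
    """Customer-namespace objects conventionally start with Z or Y."""
    return obj_name.upper().startswith(("Z", "Y"))


def dev_only_custom_objects(dev: set[str], prod: set[str]) -> list[str]:
    """Custom objects that exist in development but never reached production."""
    return sorted(name for name in dev - prod if is_custom(name))


# Hypothetical object inventories.
dev = {"ZFI_REPORT_01", "ZSD_OLD_PRICING", "ZMM_UNUSED_EXIT", "YTEST_TMP", "SAP_STD_DEMO"}
prod = {"ZFI_REPORT_01"}
print(dev_only_custom_objects(dev, prod))
# ['YTEST_TMP', 'ZMM_UNUSED_EXIT', 'ZSD_OLD_PRICING']
```

Custom objects in a registered namespace (for example names beginning with /ABC/) would need an extra rule in `is_custom`.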

During: enabling hybrid cloud options

With the ability to mask data on exit, you can also consider keeping your production environment on-premises and moving all your non-production systems to the cloud. Those are the systems that benefit most from the elasticity of cloud resources: power them up during key project phases, and switch them off when they're not required. With Object Sync and Client Sync keeping test data up to date, and masking applied on the way out, no real personal or sensitive data leaves your network.
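The saving from switching non-production systems off comes from the hours they are not running. The sketch below counts powered-on hours for a simple schedule, assuming compute is billed per hour of uptime (storage is typically still charged while a system is stopped); the schedule values and the function name are hypothetical.

```python
from __future__ import annotations

from datetime import date, timedelta


def hours_powered_on(start: date, days: int, weekday_hours: int, weekend_hours: int = 0) -> int:
    """Total hours a system runs over `days` days from `start` on a fixed schedule."""
    total = 0
    for offset in range(days):
        day = start + timedelta(days=offset)
        # date.weekday(): Monday is 0, Saturday 5, Sunday 6.
        total += weekend_hours if day.weekday() >= 5 else weekday_hours
    return total


april = date(2024, 4, 1)  # a Monday; April 2024 has 22 weekdays
always_on = hours_powered_on(april, 30, weekday_hours=24, weekend_hours=24)  # 720
office_hours = hours_powered_on(april, 30, weekday_hours=12)                 # 22 * 12 = 264
print(f"compute hours: {office_hours} of {always_on} ({office_hours / always_on:.0%})")
```

With a 12-hour weekday schedule, the system runs for roughly 37% of the hours it would run if left on permanently.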

4. S/4HANA Migration: after

After: TDMS not supported on S/4

Once your S/4 dreams have been realised, this will not be the end of the journey. The functional teams will want to embrace S/4 as the digital core of the intelligent enterprise. There are likely to be many follow-on projects leveraging the possibilities of AI, machine learning and more as your business looks to find, or keep, the competitive edge. All of those projects will need accurate test data and an agile non-production landscape. SAP's own Test Data Management offering (SAP TDMS), which led many of our customers to find DSM in the first place, does not support S/4. Given the changes we've made to our architecture over the last four years to handle the new technologies used by S/4, I can understand why. DSM is used on S/4, supported on S/4 and certified on S/4.

After: landscape agility for continuous improvement

To bridge the gap from ERP systems to the new S/4HANA world, SAP introduced compatibility packs; the principle being that some existing processes would be allowed to continue on the S/4HANA base, but only for a limited period. Whereas the ERP systems left behind have standard maintenance until 2027 (at the time of writing), the compatibility packs should all have alternative options by 2023, and are no longer supported from the end of 2025. So, the first continuous improvement may just be paying off some debts from the journey. For more information on compatibility packs check SAP Note 2269324 or this FAQ.

After each on-premises release, two feature packs can then (optionally) be installed. This is where any new capability the business may want comes from first. But once the next on-premises release is available, no further feature packs are delivered for the previous one. That means it's time to upgrade again, although S/4HANA-to-S/4HANA upgrades will be easier, with additional zero-downtime capabilities being introduced.

Up until the end of 2022, support for each S/4HANA version lasted five years, and a new on-premises version was released each year, as per the diagram above. With the release of S/4HANA 2022, SAP announced that 2023 would be a 'go-to' version, with two years between future versions, meaning the next on-premises release will be S/4HANA 2025. Standard support for 1709 and 1809 would also be extended beyond their original expiry dates, to last until 2025.

All this change needs change management, testing, validation and iteration; all the things that require agile, fit-for-purpose test environments. Exactly what is needed – how much data, and how much integration to cloud systems – may vary each time. Some projects need to fail fast, ideally with accurate data in development systems, which Object Sync can provide without risking the integrity of the development clients. Some projects may need cut-down, standalone systems in the cloud, and some additional clients in existing systems. This, again, is where DSM can help.

After: keep test systems in the same appliance, even as production grows

As your SAP system accelerates away on the other side of the S/4 migration, its footprint will undoubtedly grow. Appliances will need to scale up, or scale out, but your non-production landscape may not need to. With careful planning and use of DSM Client Sync, you can keep smaller test appliances up to date with subsets from production, so the test systems grow far more slowly than production does, and the size of the test appliance can remain the same as production grows.

The Client Sync engine gives technical teams high-level choices, such as how many months of transactions to include, or which company codes to include in the test client (an Enterprise slice), and then strictly ensures that what is created is consistent and reliable. By limiting the test system to only x months of transactions, each refresh from production will only increase its size if the volume of transactions in the last x months is higher than the volume in the x months covered by the previous refresh or, of course, if the master data has grown significantly. Client Sync also excludes, by default (this can be changed), typical causes of system growth that aren't needed in a test system, for example Workflow history and Change Documents.

The end result is that, despite potential double-digit percentage growth of the production S/4HANA system, the non-production systems can grow at a negligible rate. And if a test system starts heading towards the ceiling of its appliance, or of a t-shirt size for hardware with a Hyperscaler, you can squeeze the selection with slightly less transactional history or by excluding company codes that aren't required for testing. This provides much more control of the hardware costs in S/4HANA, and the flexibility to align costs with budget cycles more effectively.

Interested in help with your S/4 migration planning?

Our S/4 assessment report can give high-level insight into the likely levels of effort of each approach, and early warning of any considerable blocks that may await:

- Number of customers/vendors without linked Business Partners (BPs)

- Technical blockers, such as a non-Unicode system

- Areas of SAP used by your system that are no longer supported

- A visual, interactive dashboard of the system to frame internal conversations

- Amount of custom code

5. Landscape and Test Data Management requirements in an S/4HANA world

Once the migration is completed and stabilised, there will be a massive collective drawing of breath. But there are reasons you’ve made this investment. For some, the project was IT driven and typically a brownfield migration; for others, the business was the main motivator, and this may well have dictated a greenfield approach.

In this next section, we're going to look at things that may already be in play for a greenfield S/4HANA project, but might be the next steps for an organisation that went brownfield. We can sum this up in one word: 'cloud'. The original success of SAP as enterprise resource planning (ERP) software was partly because it provided seamless integration across all areas of ERP. Organisations forwent the 'best of breed' options in each area in favour of the consistent look and feel, and the integration, of SAP. It also allowed them to build teams whose technical and functional skills could easily cross over into multiple business areas. The people with those cross-functional skills are now reaching retirement age, at the same time as new entrants to the workplace look in horror, shock or amusement at the traditional SAP GUI and slightly dated processes. So, the ERP system that's either being replaced or migrated is seeing its role change dramatically too. SAP talks about S/4HANA being the digital core. By that, they mean the single source of truth: the reliable, stable system into which all the surrounding systems feed financial information. The functions that were previously integrated are moving to dedicated cloud solutions in a new era where APIs and connectivity are standardised and simply expected by everyone. 'Best of breed' is back with a vengeance.

Embracing cloud

Let's start by looking at why there are likely to be integrations with other SAP solutions that are not on-premises. There are three main reasons why organisations are embracing what I refer to as 'pure cloud' solutions (as opposed to on-premises solutions being hosted in a public or private cloud).

Business demands

Over my career, the relationship between the business and IT teams has changed massively. When I first started out (and yes, it was this millennium, but only by months), the IT Director called the shots. She or he chose the software, the Systems Integrator and so on, and only called on the business for design workshops and User Acceptance Testing. The business didn't have its own server room, or the knowledge to set up hardware and install software. To put it bluntly: they were a captive audience, trapped by security rules, lack of technical knowledge and, in some cases in my experience, outright fear of technological advance.

Now, the business can go out and procure a solution in the cloud and completely blindside the IT department. I've heard of many cases of that happening. By allowing the business to drive, 'pure cloud' has also given it a much stronger hand. Now, the CFO, COO and other senior people in an organisation are looking at the latest technology to try to give them the edge and, if that is Salesforce or Workday or whatever it may be, they want to be using it. The process of moving to those solutions may not be quite as easy as they are led to believe, and the compromise that gets thrown up is: "Let's move to S/4HANA but take SAP's alternatives to Salesforce and Workday so the integration is easier (or even consider RISE with SAP and have one throat to choke), move significant business processes from our legacy SAP ERP system to the cloud, and then integrate back to the digital core."

Cost models

Anyone responsible for a budget likes predictable, repeating costs which allow them to plan. The variable costs of housing an ABAP-stack SAP system on a Hyperscaler cloud platform, or of hosting your own servers and paying for hardware, system admins and network admins, are not as desirable. By gradually moving everything to a subscription model and paying for the service, not the consumption, it becomes much easier to budget and plan. Any major variation in cost will come with a variation in the service, which can easily be passed on to the business.

Functionality

Some current functionality in any given SAP ERP system may no longer be supported in S/4HANA, or may be supported until 2025 via compatibility packs. This may lead to a consideration of an alternative process in one of the cloud solutions, which could then bring more processes into potential scope for moving to the cloud. Allied to the first point here – the capability to leverage the latest technology, such as machine learning and AI, is easier to access from the cloud. Similarly, the impression given is that analytics across the enterprise will be easier from the pure cloud solutions.

Rethinking the role of the ERP system

The weight of those drivers will mean that over time, more and more processes will find new homes in the cloud. This means the SAP system becomes the backbone and needs to be incredibly stable as the source of financial truth for the enterprise.

It will also need to be available whenever a satellite solution calls, and the integration must be robust. Any change made to the backbone for one linked system will need to be analysed for its potential impact on the data flows into and out of all the others. Fortunately, SAP ABAP-stack systems have a tried and tested change management system which ensures the reliability of the production system and visibility of a change's journey through the landscape. How companies use that functionality does vary: some favour release cycles where a pre-production system acts as a sealed testing area for a set time before the whole release can flow to production; others run parallel development tracks for projects and business as usual (BAU). Smaller companies typically run BAU via a 'change committee' or 'steering board' and handle projects separately through the same track. The best approach for SAP ERP in a particular organisation may not be the best approach in S/4HANA, particularly where there are new integrations to cloud solutions. The reason for this is the release cycles of pure cloud solutions.

In 2019, these nine cloud products had a combined 17 unique weekends in which they released impactful productive code to customers:

- SAP Ariba

- SAP C/4HANA

- SAP Concur

- SAP Digital Supply Chain

- SAP Fieldglass

- SAP S/4HANA Cloud

- SAP Analytics Cloud

- SAP Cloud Platform

- SAP SuccessFactors

Source: https://news.sap.com/2019/12/sap-release-dates-cloud-harmony/

There are two big challenges here for system stability:

- The company can’t adjust the date of the update; in the ‘old world’, the company chose when to apply upgrades or support packs and had some leeway in the exact timings.

- Two systems which talk to each other, both receiving updates on different weekends, can mean a period of time where their integration is broken, either directly with each other or via their integration to the digital core.
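The effect of uncoordinated release dates can be counted. Treating each release as falling in an ISO calendar week (so a Saturday release and a Sunday release land in the same weekend), the sketch below returns the weekends on which at least one connected system changes, and the weekends on which only some of them do, which is where an integration is most likely to break. The systems, dates and function names are hypothetical.

```python
from __future__ import annotations

from datetime import date


def _weeks(dates: list[date]) -> set[tuple[int, int]]:
    """Map dates to ISO (year, week) pairs; Saturday and Sunday share an ISO week."""
    return {(d.isocalendar()[0], d.isocalendar()[1]) for d in dates}


def change_weekends(releases: dict[str, list[date]]) -> set[tuple[int, int]]:
    """Weekends on which at least one connected system receives an update."""
    return set().union(*(_weeks(ds) for ds in releases.values()))


def misaligned_weekends(releases: dict[str, list[date]]) -> set[tuple[int, int]]:
    """Weekends on which some, but not all, connected systems change."""
    per_system = [_weeks(ds) for ds in releases.values()]
    return set().union(*per_system) - set.intersection(*per_system)


# Hypothetical release calendars for two integrated cloud solutions.
releases = {
    "cloud_a": [date(2019, 2, 16), date(2019, 5, 18), date(2019, 8, 17), date(2019, 11, 16)],
    "cloud_b": [date(2019, 2, 17), date(2019, 5, 25), date(2019, 8, 17), date(2019, 11, 23)],
}
print(len(change_weekends(releases)))      # 6 weekends needing regression checks
print(len(misaligned_weekends(releases)))  # 4 weekends where only one side changes
```

Aligning both calendars on the same maintenance weekends would reduce the misaligned set to empty, which is the benefit SAP's move to shared maintenance weekends aims at.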

SAP did recognise this, and moved to four maintenance weekends for those solutions in 2020, but customers might also want to consider when they patch their own S/4HANA systems so that this aligns with cloud updates. It may go further than that. Organisations which didn't previously use a 'Release' approach for change management in SAP might now wish to adopt one, and use the 'preview' periods in cloud solutions to run end-to-end testing across the cloud solutions and the digital core before everything goes live. This is not a trivial change to make but, with so much changing as part of the S/4HANA journey, it makes sense to consider adopting it as part of the project. If a change to a 'Release' approach is planned, then the current refresh strategy for non-production SAP systems should also be reviewed. The timing of 'copy backs', whether using traditional full copies or a system/client subset technology like Client Sync, might need to change accordingly. For most organisations, it means refreshing the system where release testing will happen just before all the changes are applied, so this becomes a strictly timed, planned and communicated periodic activity. And it needs to be bulletproof in terms of expected versus actual duration.
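Being bulletproof on duration means planning the copy-back from its worst observed run time, not its average. A minimal sketch, assuming you keep a history of past refresh durations in hours and add a percentage safety margin on top; the margin, the dates and the function name are hypothetical choices.

```python
from __future__ import annotations

from datetime import datetime, timedelta


def latest_refresh_start(testing_starts: datetime, past_runs_hours: list[float],
                         safety_margin: float = 0.25) -> datetime:
    """Latest start for a copy-back that must finish before release testing begins.

    Plans for the slowest refresh seen so far plus a percentage margin.
    """
    if not past_runs_hours:
        raise ValueError("need at least one historical refresh duration")
    worst = max(past_runs_hours)
    return testing_starts - timedelta(hours=worst * (1 + safety_margin))


testing_starts = datetime(2024, 3, 4, 8, 0)  # hypothetical: Monday 08:00
past_runs = [30.0, 34.0, 41.0]               # hours taken by previous refreshes
print(latest_refresh_start(testing_starts, past_runs))  # 2024-03-02 04:45:00
```

Here the worst run of 41 hours plus a 25% margin gives 51.25 hours, so the refresh must start by early Saturday morning to be ready for Monday's testing.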

Change management and test data availability

Aligning release cycles and schedules is all well and good, but what about the mechanism for controlling change through the landscape? And what options are there for provisioning data that is representative of how it will be in production when the change gets there? In S/4HANA, the same capabilities that have always been there remain available, with both standard SAP and third-party options for each. The SAP Transport Management System and client/system copies respectively each have third-party alternatives that sit inside, or integrate with, the ABAP stack. But what about these 'pure cloud' solutions? In this next section, we'll look at some examples. Unfortunately, a definitive list would be ever-changing and out of date soon after publishing, and in your world the cloud solutions could come from an almost infinite list of non-SAP-owned products. I will provide a high-level analysis of some of the SAP-owned cloud solutions and hopefully give you a steer on what to consider when researching the exact technologies your project is adopting to orbit the S/4HANA digital core.

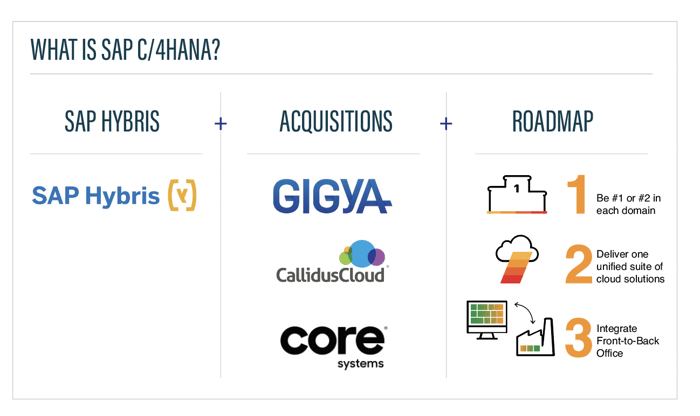

C/4HANA: What is C/4HANA?

Firstly, we need to define exactly what C/4HANA is.

This excellent blog from Gavin Mooney explains the names and origins of the five solutions that make up C/4HANA:

- SAP Customer Data Cloud (based on Gigya)

- SAP Marketing Cloud (previously Hybris Marketing Cloud)

- SAP Commerce Cloud (Hybris Commerce Cloud)

- SAP Sales Cloud (a combination of SAP Hybris Cloud for Sales, SAP Subscription Billing and CallidusCloud)

- SAP Service Cloud (Hybris Cloud for Service, SAP Customer Engagement Center and others)

The last two were previously packaged by SAP as C4C (SAP Cloud for Customer). Also worth noting: only the last two, SAP Sales Cloud and SAP Service Cloud, aren't available on-premises.

C/4HANA: Change management

With the multitude of solutions, it's not surprising that we need to break this down into different sets:

SAP Sales and Service Cloud

There are two capabilities available via the Service Control Workcenter. The first, 'Copy solution profile', allows you to copy all the Business Configuration from a source tenant to a target tenant. This would typically be done at the start of a C/4HANA project to provide a testing environment. There are plenty of activities required during a C/4HANA implementation that are not included in the Business Configuration work centre and previously had to be repeated manually in each tenant. These are now covered by the second capability: Transport Management. From the naming conventions used, it's quite clear where this idea came from! Transport routes are created, and then transport requests can be created for Adaptation Changes, Business Roles, Language Adaptations, Local Form Templates and Mashups.

SAP Customer Data Cloud

The Gigya Console provides the option for Copy Configuration, but this is typically used between two production ‘sites’ (sites being the term used rather than ‘tenant’). Customer Data Cloud is much more of a black box. You develop outside the solution on other platforms that leverage APIs to integrate with it. There simply isn’t the same concept of test systems and change management. There is more information available here.

SAP Marketing Cloud

The SAP Help Portal for SAP Marketing Cloud provides a Solution Life Cycle Overview (SAP log in required) which explains how a two-system landscape is typically used. A Quality system receives all the data and is configured before being copied to production for the go-live. The Segmentation and target group configuration, as well as the Extensibility Objects (custom fields, custom logic and so on), still have to be manually imported into production using the ‘Manage your Solution’ app. Ongoing changes for Segmentation and target group configuration and Extensibility Objects continue to be managed the same way after go-live. All other configuration changes are done in Quality and then transported, by leveraging the ‘Manage your Solution’ app to create a service request ticket. When this request is processed the entire configuration moves from Quality to Production, not individual changes.

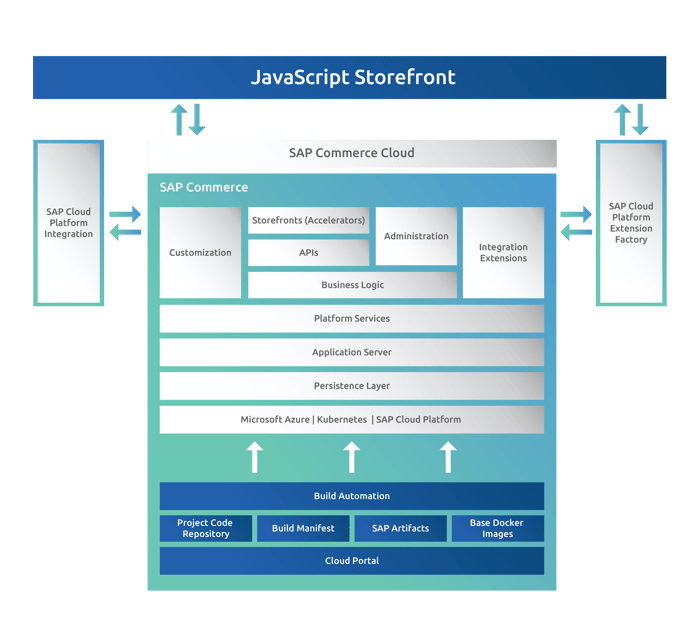

SAP Commerce Cloud

The public cloud edition of SAP Commerce runs in Azure, leveraging Kubernetes and the SAP Cloud Platform.

Extensions are used to manage additional functionality. Some are delivered by SAP, but custom extensions can also be built. An extension generator called extgen creates new extensions for you to work from, based on extension templates. Configuration is carried out via code, and continuous integration/continuous delivery using code from a Git repository is recommended. The build and deployment of packages can be automated using APIs and third-party tools. Read more about the SAP Commerce Cloud architecture.

C/4HANA: Test data provisioning

The proliferation of underlying platforms also means test data provisioning capabilities differ greatly. SAP Sales Cloud and SAP Service Cloud both have a 'Tenant refresh' procedure, through which the entire system and its data can be copied either from production to a test tenant or from one test tenant to another. The process is managed by logging an incident from within the solution. SAP Note 2751824 provides more detail.

SAP Customer Data Cloud doesn’t have the same concept of test instances or tenants, with the only configuration being done outside of the solution to call APIs that it provides.

The SAP Marketing Cloud has a two-tier architecture and all configuration made in the test system goes to production with each deployment. The data in the test environment is pulled from corresponding test systems such as S/4HANA Dev/test clients. Multiple Segmentation Profiles can be used to take data from more than one S/4HANA Dev/test system into SAP Marketing Cloud. This means the test tenant does not need to be refreshed from production but there is a dependence on having good test data in the linked system’s test environment.

SAP SuccessFactors

In late 2011, SAP acquired SuccessFactors, which at the time was the second-largest cloud-based software provider after Salesforce.com, with more than 6,000 customers and over 32 million users in 60 industries across more than 185 countries.

In 2012, SAP announced to its approximately 13,000 on-premises SAP HCM customers a planned move to the cloud and the planned future end of its on-premises HCM applications, including Payroll. When this was first announced, customers were encouraged to move to the cloud and adopt the SAP SuccessFactors cloud versions of the HCM solutions.

Since the acquisition, SAP has adjusted the options available to customers regarding their roadmap and how quickly they need to move to the cloud, as detailed in our Ultimate Guide: SAP HCM & Payroll Options for Existing Customers. SuccessFactors cloud technology follows a different methodology from the traditional on-premises SAP change management model, in which workbench changes are transported from Dev to QA to Production, and it encourages standard configuration rather than a highly customised system. Customers who wish to extend the solution are encouraged to use the Metadata Framework.

SuccessFactors: Change Management

The Metadata Framework (MDF) is how SuccessFactors provides extensibility. It allows functional users, such as business analysts, to configure objects and business rules with very little technical knowledge. These extensions are automatically supported by the OData API for reading and sending data. When standard objects are edited, they are moved to replacement MDF versions. Picklist entries determine the available values for fields, and these can also be maintained by non-technical users. Some, but not all, of these settings could be migrated from development instances to production ones via the Instance Sync utility, and the process was cumbersome.

Read this blog from my colleague Danielle Larocca to discover how this is now being improved with the new Configuration Center, which is much more similar to the Transport Management System in the ABAP stack. Many customers will already have significant misalignment between their development, test, preview and production instances, so adopting the Configuration Center with effective change management processes will need to go hand in hand with a clean-up and alignment project.

SuccessFactors: test data provisioning

The Instance Refresh utility is available for refreshing test instances from production or from another test instance. For small and medium-sized instances, an automatic scheduling capability has been introduced, but for larger instances refreshes are still requested by ticket. Where SuccessFactors replicates to an Employee Central Payroll (ECP) system or a backend SAP system for Finance and potentially Payroll, that backend system will normally be refreshed in line with the SuccessFactors Instance Refresh. Because the data involved is extremely sensitive, this creates a need to mask or scramble it. When investigating this, it’s important to realise that the replication process does not change data in all the ABAP stack tables (for example, PCL2 and REGUH), so you may need a masking solution, such as Data Secure™, that can consistently mask both SuccessFactors and the backend system before they are released to users.
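To make ‘consistently mask’ concrete, here is a minimal Python sketch of one generic technique, deterministic pseudonymisation with a keyed hash (HMAC-SHA256): the same source value always produces the same masked value, so a name masked in SuccessFactors matches the same name masked in the backend. This is not how Data Secure works internally; the key, the replacement names and the record layouts are hypothetical, and the field names are only examples.

```python
import hashlib
import hmac

# Hypothetical masking key: in practice it would be held securely and never
# stored alongside the masked copies.
MASKING_KEY = b"example-secret-key"

# Hypothetical pool of replacement first names.
FIRST_NAMES = ["Alex", "Sam", "Jordan", "Taylor", "Morgan", "Casey"]


def pseudonym(value: str, choices: list[str]) -> str:
    """Map a source value to a replacement; the same input always gives the same output."""
    digest = hmac.new(MASKING_KEY, value.encode("utf-8"), hashlib.sha256).digest()
    return choices[int.from_bytes(digest[:8], "big") % len(choices)]


def mask_record(record: dict, rules: dict[str, list[str]]) -> dict:
    """Return a copy of the record with each listed field replaced by its pseudonym."""
    masked = dict(record)
    for field, choices in rules.items():
        if field in masked:
            masked[field] = pseudonym(masked[field], choices)
    return masked


# Hypothetical records for the same employee in SuccessFactors and the backend.
sf_employee = {"userId": "100234", "firstName": "Priya"}
backend_employee = {"PERNR": "00100234", "VORNA": "Priya"}

sf_masked = mask_record(sf_employee, {"firstName": FIRST_NAMES})
backend_masked = mask_record(backend_employee, {"VORNA": FIRST_NAMES})
print(sf_masked["firstName"] == backend_masked["VORNA"])  # True
```

Keying the hash with a secret stops anyone without the key from rebuilding the mapping by hashing candidate names. A small replacement pool like this one will map several real names to the same pseudonym, which is acceptable for names but not for identifiers that must stay unique.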

For more information on provisioning test data for SuccessFactors, please see the white paper: Understand the Configuration Center’s role in SuccessFactors Instance Management, co-authored with my colleague Danielle Larocca.

How can you support heightened test data requirements in the S/4HANA world?

Clearly, the role of the ERP system is changing with the move to S/4HANA, as it becomes the digital core of the Intelligent Enterprise. With that change in role come very different demands on the S/4HANA landscape in terms of providing the right data and configuration for integration testing with these peripheral cloud solutions. Agility in your Test Data Management solutions and processes will become ever more important, as the pace of change keeps accelerating with the new capabilities of the SAP Business Technology Platform (BTP) driving innovation across your SAP estate. Find out how our Data Sync Manager (DSM) suite can help in this on-demand webinar recording.

Read mini ebook: Landscape and Test Data Management requirements in an S/4HANA world

6. What about legacy SAP data?

This exciting new world of SAP beckons us and our ageing SAP systems, but we can’t simply reinvent our technology enterprise without any thought for the past. Our responsibility for effective, secure management of data extends beyond the current and future data of the organisation. Every industry has a requirement to keep financial and staffing records for a specific period of time, and many industries have other compliance regulations that affect the retention of historical records (for example, FMCG or pharmaceutical companies needing exact batch information for anything shipped from their sites). There are also geographical differences that can affect how long data is kept, China and Austria being two examples where local laws override globally influential legislation such as GDPR (the European data protection regulation that has acted as a gold standard for data privacy law changes in recent years).

The journey your SAP system is taking could well mean decommissioning entire systems, in the case of greenfield projects, or subsets of data, in the case of selective transitions where not everything moves to S/4HANA. Where you decide to retain that data will be shaped by legislation such as GDPR, which requires any IT project to implement ‘data protection by design’. This may well mean redacting or removing some aspects of the data at the point it is decommissioned, and then redacting and/or removing further data as it reaches the retention periods specified by the organisation’s legal team. The default approach of ‘display-only SAP systems’ is not fit for purpose in the modern world of technology and data privacy. For more information on why a display-only system is likely not the best choice, please check out this blog.
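The ongoing part of this, removing data as it reaches the end of its retention period, can be sketched as a simple rule check. The Python below assumes a default retention period per data category with country-specific overrides; the categories, countries and numbers of years are hypothetical placeholders, not legal guidance, and the real periods must come from the organisation’s legal team.

```python
from dataclasses import dataclass
from datetime import date

# Placeholder retention periods in years (hypothetical, not legal guidance).
DEFAULT_RETENTION_YEARS = {"payroll": 7, "sales_order": 10}
# Hypothetical country-specific overrides, keyed by (country, category).
COUNTRY_OVERRIDES = {("AT", "payroll"): 9}


@dataclass
class ArchivedRecord:
    key: str
    country: str
    category: str
    closed_on: date


def add_years(d: date, years: int) -> date:
    """Add whole years, mapping 29 February to 28 February in non-leap years."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        return d.replace(year=d.year + years, day=28)


def retention_end(record: ArchivedRecord) -> date:
    """Use the country override if one exists, otherwise the category default."""
    years = COUNTRY_OVERRIDES.get(
        (record.country, record.category),
        DEFAULT_RETENTION_YEARS[record.category],
    )
    return add_years(record.closed_on, years)


def due_for_removal(records: list[ArchivedRecord], today: date) -> list[str]:
    """Return the keys of records that are on or past their retention end date."""
    return [r.key for r in records if retention_end(r) <= today]


records = [
    ArchivedRecord("PAY-1", "DE", "payroll", date(2015, 3, 31)),
    ArchivedRecord("PAY-2", "AT", "payroll", date(2015, 3, 31)),
]
print(due_for_removal(records, date(2023, 1, 1)))  # ['PAY-1']
```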

The requirements for the historical data are typically:

- Compliance: the data may or may not ever actually be viewed, so the format doesn’t matter too much.

- Queries: inquiries will be made, and specific accounting or logistics documents might need to be referenced to answer them. Here the format needs to be easy to use but doesn’t need to match exactly what was previously in SAP.

- Auditor access: the format may not need to match what was in SAP, but it may be specifically defined by the auditors or authorities responsible, and this can vary from country to country and by industry.

- Reproduction of documents: payslips, invoices or other documents issued to employees or business partners may need to be reproduced at a later date.

Structured data

The first three requirements are typically met with what is referred to as structured data. This means the information needed is stored in table entries in SAP, and those table entries need to be extracted and made available for search and retrieval. The complexity with SAP and structured data is the depth of the data model. A single Material Master, for example, could contain entries from 30 to 40 or more tables, with hundreds of rows in total. The archive solution needs to store not only the rows but also the linkages between them. The other challenge with SAP is the relationships between different data objects. For example, a Sales Order inherits data from the Customer master to provide the Ship-to or Sold-to party. This means the archive must either duplicate the Customer data alongside the stored Sales Orders or be aware of the linkages between different data keys. With SAP containing over 60,000 tables, this is not trivial.
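The sketch below shows, in Python, the second option described above: storing each row once and recording links between data objects, so an archived sales order can be reassembled with its customer master data without duplicating it. The `Archive` class is hypothetical; SAP table and field names such as VBAK, VBAP, KNA1, VBELN and KUNNR are used purely as labels, and a real data model spans far more tables.

```python
from collections import defaultdict


class Archive:
    """Hypothetical archive that stores extracted rows plus the links between data objects."""

    def __init__(self):
        self.rows = defaultdict(dict)   # rows[table][key] -> row (field -> value)
        self.links = defaultdict(list)  # links[(table, key)] -> referenced (table, key) pairs

    def store(self, table, key, row, refers_to=()):
        self.rows[table][key] = row
        self.links[(table, key)].extend(refers_to)

    def retrieve(self, table, key):
        """Return the requested row and every row reachable through its links."""
        result = {}
        stack = [(table, key)]
        while stack:
            current = stack.pop()
            if current in result:
                continue  # already collected; also guards against cycles
            result[current] = self.rows[current[0]][current[1]]
            stack.extend(self.links.get(current, []))
        return result


archive = Archive()
# The customer master is stored once...
archive.store("KNA1", "100001", {"KUNNR": "100001", "NAME1": "Acme Ltd"})
archive.store("VBAP", ("5001", "10"), {"VBELN": "5001", "POSNR": "10", "MATNR": "MAT-01"})
# ...and the sales order header refers to it, and to its item, by key instead of copying.
archive.store("VBAK", "5001", {"VBELN": "5001", "KUNNR": "100001"},
              refers_to=[("KNA1", "100001"), ("VBAP", ("5001", "10"))])

order = archive.retrieve("VBAK", "5001")
print(order[("KNA1", "100001")]["NAME1"])  # Acme Ltd
```

Storing links rather than copies keeps the archive smaller and avoids inconsistent duplicates of the customer master, at the cost of the archive having to understand the relationships between keys, which is exactly the non-trivial part when there are tens of thousands of tables.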

Unstructured data

Unstructured data is typically associated with the key of one of these data objects but is not row- and column-based. It is often an image or PDF stored in the SAP system or, in the case of payslips and some documents generated via SAP forms, produced dynamically from the structured data as and when required. This is the challenge for the ‘Reproduction of documents’ use case: everything that may be required will need to be generated and stored in the archive. As well as the 60,000 tables, an SAP system contains over 400,000 programs which apply logic to the table data for a variety of functions. This logic cannot be replicated in an archive, so anything that has to be calculated from the structured data must be calculated before the SAP system is switched off and stored as static data.
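As an illustration of ‘generate everything before switch-off’, this Python sketch renders documents from the structured data while the logic still exists, then keeps only the static output with a SHA-256 checksum for later integrity checks. The plain-text payslip layout and the `render_payslip` and `freeze_documents` functions are hypothetical stand-ins for the SAP forms that would produce real PDFs.

```python
import hashlib
from datetime import date


def render_payslip(employee: dict, results: list[dict]) -> bytes:
    """Hypothetical renderer that turns payroll results into a static document."""
    lines = [f"Payslip for {employee['name']} ({employee['id']})",
             f"Period ending {employee['period_end'].isoformat()}"]
    for item in results:
        lines.append(f"{item['wage_type']:<6}{item['text']:<20}{item['amount']:>10.2f}")
    return "\n".join(lines).encode("utf-8")


def freeze_documents(payslips: list[tuple[dict, list[dict]]]) -> dict[str, dict]:
    """Render every document while the generating logic still exists; keep only static output."""
    store = {}
    for employee, results in payslips:
        content = render_payslip(employee, results)
        key = f"{employee['id']}:{employee['period_end'].isoformat()}"
        store[key] = {"content": content, "sha256": hashlib.sha256(content).hexdigest()}
    return store


employee = {"id": "00100234", "name": "P. Example", "period_end": date(2023, 1, 31)}
results = [{"wage_type": "/101", "text": "Gross Pay", "amount": 4250.0}]
document_store = freeze_documents([(employee, results)])
print(sorted(document_store))  # ['00100234:2023-01-31']
```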

Dynamic structured data

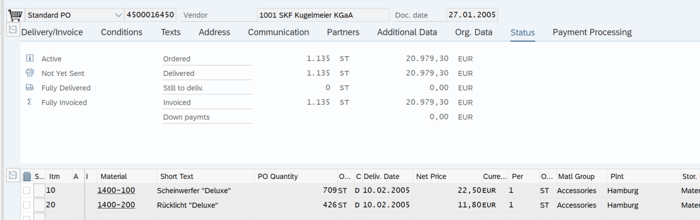

This leads us to another challenge. When business users verify structured data in an archive, you can be blindsided by what I call ‘dynamic structured data’. For example, in the Purchase Order display transaction (ME23N), there is a significant amount of structured data which can be extracted by SQL selections on the tables.

There is then a ‘Status’ tab, which is calculated dynamically when viewed, combining data from the items, system configuration and texts to display the current status. At the point of decommissioning the system, this will of course be a fixed final status, but can your extraction capture these calculated values and store them as structured data, or does this bleed over into an additional unstructured data requirement? This could be one of many dynamic structured data situations, and the more of them that can be captured as structured data, the more efficient the archive will be.
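One way to answer ‘yes’ to that question is to evaluate the calculated values during extraction and store them as ordinary columns. The Python sketch below assumes a deliberately simplified, hypothetical status rule based on ordered, delivered and invoiced quantities; SAP’s actual status logic in ME23N is far richer, and `derive_status` and `extract_po` are illustrative names only.

```python
from dataclasses import dataclass


@dataclass
class POItem:
    ordered_qty: float
    delivered_qty: float
    invoiced_qty: float


def derive_status(items: list[POItem]) -> dict:
    """Hypothetical, simplified status rule, evaluated once at extraction time."""
    ordered = sum(i.ordered_qty for i in items)
    delivered = sum(i.delivered_qty for i in items)
    invoiced = sum(i.invoiced_qty for i in items)
    if delivered == 0:
        overall = "Not yet delivered"
    elif delivered < ordered:
        overall = "Partially delivered"
    elif invoiced < delivered:
        overall = "Delivered, not fully invoiced"
    else:
        overall = "Completed"
    return {"ordered": ordered, "delivered": delivered,
            "invoiced": invoiced, "status": overall}


def extract_po(header: dict, items: list[POItem]) -> dict:
    """Store the calculated values next to the table data as ordinary structured columns."""
    return {**header, **derive_status(items)}


po = extract_po({"EBELN": "4500000001"}, [POItem(10, 10, 10), POItem(5, 5, 5)])
print(po["status"])  # Completed
```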

In addition to the programs that use and combine the structured data, there are also the screens of SAP. At the very outset of a decommissioning project, it is essential to set the expectation that the solution provided will not look like SAP GUI. I tend to think of some SAP power users as having Stockholm Syndrome when it comes to SAP GUI. The screenshot above, with its 8-bit icons for ‘Information’ or ‘Transported’, does look rather laughable in the modern era, but the muscle memory of 20 years of working with SAP GUI overrides such aesthetics for many users, and they will need gently coaxing away from their captor! Where they will have an irrefutable point, though, is where SAP screens use a data domain or a separate text table to convert alphabetical or numerical codes into descriptions (for example, wage types or order types). So the archive solution will have to be able to show not just /101 or NB but also, or alternatively, ‘Gross Pay’ or ‘Standard PO’ respectively.
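A minimal Python sketch of the text-lookup requirement: the archive keeps the code values from the data tables plus the extracted descriptions, keyed here by a hypothetical (domain, language, code) tuple, and can display the code, the description or both. The domain names and lookup structure are assumptions; only the /101 and NB examples come from the text above.

```python
# Hypothetical descriptions extracted from SAP text tables before shutdown,
# keyed by (domain, language, code).
TEXTS = {
    ("wage_type", "EN", "/101"): "Gross Pay",
    ("order_type", "EN", "NB"): "Standard PO",
}


def describe(domain: str, code: str, language: str = "EN", show_code: bool = True) -> str:
    """Return the description for a code, optionally alongside the raw code."""
    text = TEXTS.get((domain, language, code))
    if text is None:
        return code  # fall back to the raw value if no description was archived
    return f"{code} {text}" if show_code else text


print(describe("wage_type", "/101"))  # /101 Gross Pay
```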

My overall recommendation is: do not underestimate the challenge of effectively decommissioning a legacy SAP system, or even subsets of data from SAP. It will require planning, collaboration and the right technology to ease the way. Otherwise, your system could throw up a privacy or technology challenge long after it has stopped delivering any great business value to the organisation.

Explore case studies from satisfied clients

EPI-USE Labs sparks off S/4HANA for Aberdare

"EPI-USE Labs helped us implement our SAP roadmap, and the team is always available when we need them. We achieved upgrades into the HANA space within short timelines and at low costs; the migration was seamless with no interruptions in work. We now have efficient technical support and effective IT management tools."

Raven Mahabeer, General Manager: Information Management at Aberdare Cables

Dis-Chem improves system health with a cost-effective migration to HANA

"The EPI-USE Labs and First Technology teams delivered the project in record time with flying colours. The migration was seamless, and they overcame all the technical barriers with ease. Their communication was very good throughout; they kept our stakeholders well-informed, and aligned expectations. And they’ve continued to provide us with excellent support."

Kim Sim, Chief Information and Innovation Officer, Dis-Chem Pharmacies

Purdue University transforms the future with S/4HANA

"We could not be more pleased with the initial outcomes of Finance transformation and our partnership with EPI-USE and EPI-USE Labs. Together we were able to take a vision for simplified finance structures and processes and build technology that significantly enhances our financial transparency, while creating automated, efficient and paperless workflow."

Timothy Werth, Senior Director, Business Process Re-engineering, Purdue University